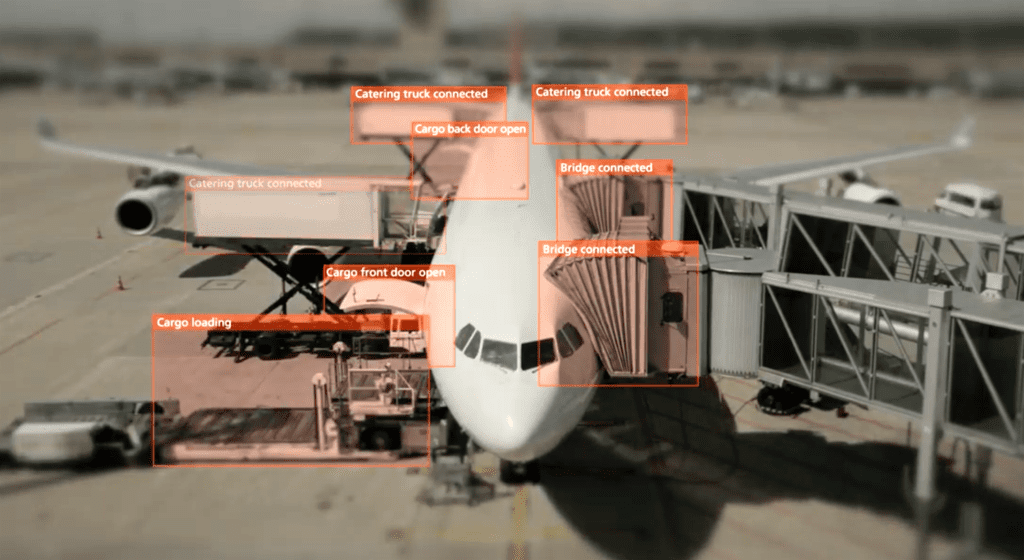

Seattle-Tacoma International Airport will roll out the use of artificial intelligence at 85 of its aircraft stands by the first quarter of 2021. Photo: Assaia

Neural networks are seeing early adoption, with potential for expansion, in applications across multiple segments of aviation, for electronic systems both on board and off board aircraft.

What is a Neural Network?

Neural networks are a subclass of systems within the overall field of machine learning. Experts define a neural network as a computational model consisting of learning algorithms that function similarly to the way neurons within the human brain communicate through synapses to help enable normal bodily functions.

NVIDIA, known for supplying computers for autonomous cars and drones, defines the term as “a biologically inspired computational model that is patterned after the network of neurons present in the human brain” and “can also be thought of as learning algorithms that model the input-output relationship.”

A neural network can be trained to understand the data that it is continuously fed (the input) and can then process that data to generate intelligent decisions or answers to the complex problems that engineers have designed it to solve (the output). Under this input-to-output method, the neural network uses what are known as neural layers, which exist in three different types.

These include an input layer where data is first injected into the neural network, intermediate or hidden layers where computation on the data occurs between the input and output layers, and an output layer that produces results in the form of actionable information, according to a 2017 MIT article explaining how neural networks are expanding in functionality published by Lawrence Hardesty, a science writer for Amazon.
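The three layer types described above can be illustrated with a minimal sketch. The layer sizes, weights and function names here are hypothetical values chosen only for illustration; a real network would learn its weights from training data rather than have them written by hand.

```python
import math

def relu(z):
    """Hidden-layer activation: pass positive values, clamp negatives to zero."""
    return max(0.0, z)

def sigmoid(z):
    """Output activation: squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def dense(inputs, weights, biases, activation):
    """One fully connected layer: activation(weights . inputs + bias) per unit."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

# Hypothetical hand-picked parameters: 2 inputs -> 3 hidden units -> 1 output.
HIDDEN_W = [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]]
HIDDEN_B = [0.0, 0.1, -0.1]
OUTPUT_W = [[0.7, -0.5, 0.2]]
OUTPUT_B = [0.05]

def forward(features):
    """Input layer -> hidden layer -> output layer."""
    hidden = dense(features, HIDDEN_W, HIDDEN_B, relu)
    return dense(hidden, OUTPUT_W, OUTPUT_B, sigmoid)

prediction = forward([1.0, 2.0])
print(prediction)
```

The input layer is simply the list passed to `forward`; all of the computation happens in the hidden and output layers.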

The artificial intelligence and machine learning engineering community has also classified two primary forms of neural networks. A feedforward neural network is the simpler version, in which data is input, transformed by neural layers and output as information in a one-way, forward-facing cycle. A more advanced form is the recurrent neural network, which uses memory and feedback loops to continuously feed key data and events that it has learned back into a more dynamic process.
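The difference between the two forms can be sketched with a single recurrent unit. This is an illustrative toy under assumed parameters (`w_in`, `w_rec` and `bias` are hypothetical names and values), not a production recurrent architecture: the feedback weight `w_rec` carries the previous state forward, and setting it to zero removes the memory so each output depends only on the current input.

```python
import math

def rnn_step(x, h_prev, w_in, w_rec, bias):
    """One recurrent update: the new state mixes the current input with the previous state."""
    return math.tanh(w_in * x + w_rec * h_prev + bias)

def run_rnn(sequence, w_in=0.5, w_rec=0.9, bias=0.0):
    """Feed a sequence through the unit one step at a time, returning every state."""
    h = 0.0  # initial memory is empty
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_in, w_rec, bias)
        states.append(h)
    return states

# A single pulse followed by silence.
with_memory = run_rnn([1.0, 0.0, 0.0])
without_memory = run_rnn([1.0, 0.0, 0.0], w_rec=0.0)

print(with_memory)     # later states stay above zero: the pulse is remembered
print(without_memory)  # later states fall to zero: no feedback, no memory
```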

A schematic overview of how a basic neural network functions. Photo: NVIDIA

Today’s neural networks are enabled by the cutting-edge graphics processing units (GPUs) and processors being developed by companies such as NVIDIA, Intel and NXP, among others. In May 2019, Intel published a benchmark comparing ResNet-50 performance on its latest generation of Xeon Scalable processors against NVIDIA’s Tesla V100, reporting that its deep learning software achieved a throughput of 7878 images per second on Xeon Platinum 9282 processors.

“We achieved 7878 images per second by simultaneously running 28 software instances each one across four cores with batch size 11. The performance on NVIDIA Tesla V100 is 7844 images per second and NVIDIA Tesla T4 is 4944 images per second per NVIDIA’s published numbers as of the date of this publication,” Intel wrote in the benchmark.
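The arithmetic behind the quoted figures can be checked in a few lines. This sketch uses only the numbers in Intel’s statement; the variable names are illustrative, and it assumes the 28 instances each hold their four cores exclusively.

```python
# Figures quoted from Intel's benchmark statement.
INSTANCES = 28
CORES_PER_INSTANCE = 4
BATCH_SIZE = 11
XEON_9282_IPS = 7878   # images per second
TESLA_V100_IPS = 7844
TESLA_T4_IPS = 4944

# Assumes instances do not share cores.
total_cores = INSTANCES * CORES_PER_INSTANCE      # 112 cores in use
images_in_flight = INSTANCES * BATCH_SIZE         # 308 images per round of batches
per_instance_ips = XEON_9282_IPS / INSTANCES      # about 281 images/s per instance

xeon_vs_v100 = XEON_9282_IPS / TESLA_V100_IPS     # about 1.004, under 1% ahead
xeon_vs_t4 = XEON_9282_IPS / TESLA_T4_IPS         # about 1.59

print(f"cores used: {total_cores}, images in flight: {images_in_flight}")
print(f"per-instance throughput: {per_instance_ips:.1f} images/s")
print(f"Xeon vs V100: {xeon_vs_v100:.4f}x, Xeon vs T4: {xeon_vs_t4:.2f}x")
```

On these numbers the Xeon result edges out the V100 figure by less than half a percent, so the comparison is closer to parity than to a clear lead.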

Neural network features were also a key aspect of the debut of NXP Semiconductors’ i.MX 8M Plus application processor at the Consumer Electronics Show (CES) 2020 in Las Vegas. NXP indicates that this is the first i.MX family to feature a dedicated neural processing unit capable of learning and inferring inputs with almost no human intervention.

Research and development around the integration of neural networks into aeronautical systems and networks can be found as far back as the early to mid 1990s. As an example, a 1996 paper published by Charles C. Jorgensen for the NASA Ames Research Center describes a neural network’s ability to deal with nonlinear and adaptive control requirements, parallel computing and function mapping accuracy as advantageous in applications “including automated docking of spacecraft, dynamic balancing of the space station centrifuge, online reconfiguration of damaged aircraft, and reducing cost of new air and spacecraft designs.”

Aerospace Adoption of Neural Networks

Developments in recent months at some of the largest names in aerospace, along with up-and-coming providers of artificial intelligence and machine learning platforms, have shown how the industry will adopt neural networks in the near future.

Airbus, and its Future Combat Air System (FCAS) development partner Dassault Aviation, are targeting the use of neural networks as a key enabler of the next-generation air combat development program involving France, Germany and Spain to develop a system of fully automated remote air platforms and sixth-generation fighter jets.

The use of neural networks was discussed during a May 14 virtual working group meeting on FCAS between Airbus and the Germany-based Fraunhofer Institute for Communication, Information Processing and Ergonomics FKIE. Thomas Grohs, chief architect of FCAS at Airbus Defense and Space, told the group that he is training a neural network for use in the next generation air combat system as a way of eliminating machine errors. Grohs is also working with the group to develop ethical guidelines for the use of neural networks in fighter jets and drones.

“I have to make the system flexible from a neural network point of design because I need to train such neural networks on their specific behavior,” Grohs said. “However, this behavior may differ from the different users that may use the equipment from their ethical understanding. This is for me then driving a design requirement that I have to make the system modular with respect to neural network implementation, that those are loadable, pending one that uses this from his different ethical understanding.”

Assaia, a Zurich-based supplier of artificial intelligence solutions, uses image recognition algorithms powered by NVIDIA’s GPUs in and around airport terminals to capture video of airplane turnarounds and train a neural network on it. The goal is to help airlines and airplane service or maintenance vendors eliminate actions or activities on the airport surface that might lead to delays.

Their neural network generates timestamps associated with each individual airplane turnaround activity captured on video, including baggage transfers, cleaning, catering and boarding, among other processes. The timestamps are then consumed by machine learning algorithms and fused with other relevant data sources to predict the timing of key events, such as refueling, during an aircraft turnaround process. In May, Seattle-Tacoma International Airport signed an agreement with Assaia to start deploying their GPUs throughout the airport.

Neural network-powered image recognition is also in use at London Heathrow Airport, where Searidge’s Aimee artificial intelligence and machine learning platform uses a network of high fidelity cameras lining the runway to monitor approaches and landings and alert controllers when the runway is safely cleared for the next arrival.

At Heathrow, Aimee’s image segmentation neural network uses archived video footage from the cameras, which includes images of aircraft arriving and crossing over the runway threshold, the point at which it is safe for the next aircraft to land. NATS, the air navigation service provider (ANSP) for the U.K., wants to use the neural network’s monitoring of aircraft arrivals to assist controllers at Heathrow when they’re unable to physically see the runway.
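As a rough illustration of how a segmentation output could feed a runway-clear decision, the sketch below checks whether any pixel labeled as aircraft falls inside a runway region. It is not Searidge’s implementation: the class label, the `runway_occupied` function, the `min_pixels` threshold and the tiny hand-written masks are all hypothetical stand-ins for what a real segmentation network and camera calibration would provide.

```python
AIRCRAFT = 1    # hypothetical class label assigned by a segmentation network
BACKGROUND = 0

def runway_occupied(mask, runway_region, min_pixels=1):
    """Return True if at least min_pixels aircraft pixels lie inside the runway region.

    mask: 2D list of class labels, one per pixel.
    runway_region: iterable of (row, col) pixel coordinates covering the runway.
    """
    aircraft_pixels = sum(1 for r, c in runway_region if mask[r][c] == AIRCRAFT)
    return aircraft_pixels >= min_pixels

# Toy 3x4 frames; the middle row stands in for the runway.
runway_region = [(1, c) for c in range(4)]

frame_landing = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],   # aircraft still on the runway
    [0, 0, 0, 0],
]
frame_vacated = [
    [0, 0, 0, 1],   # aircraft has moved off the runway
    [0, 0, 0, 0],
    [0, 0, 0, 0],
]

print(runway_occupied(frame_landing, runway_region))  # True
print(runway_occupied(frame_vacated, runway_region))  # False
```

A larger `min_pixels` threshold would make the check less sensitive to isolated mislabeled pixels, at the cost of reacting later to a small or distant aircraft.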

Air traffic controllers at London Heathrow Airport are evaluating the use of an artificial intelligence and machine learning platform, Aimee, to assist with aircraft arrivals in low visibility conditions. Photo: NATS UK

“At Heathrow Aimee runs on GPU workstations to accelerate the image segmentation Neural Network. The outputs of the Aimee sub-systems can then either be displayed on Searidge’s CWP (as is the case at LHR) or fed into external systems,” Marco Rueckert, head of innovation for Searidge Technologies, said.

The near-term potential for the use of neural networks in aircraft systems took a major step forward in April with the publication of a joint report by EASA and Daedalean that addresses the challenges posed by the use of neural networks in aviation. Daedalean, also based in Zurich, Switzerland, has nearly 40 software engineers, avionics specialists and pilots working on what it believes will be the aviation industry’s first autopilot system to feature deep convolutional feedforward neural networks.

Specific near-term use cases, system architectures and aeronautical data processing standards for achieving aircraft certification are defined in the report. The company has been actively flight testing the use of a neural network for visual landing guidance in air taxis, drones and a Cessna 180 over the last year. In 2019, Melbourne, Florida-based avionics maker Avidyne began flight testing the use of Daedalean’s neural networks integrated into cameras and a linked autopilot system. Honeywell Aerospace also signed a technological partnership with the company to develop systems for autonomous takeoff and landing, GPS-independent navigation and collision avoidance.

Image recognition, segmentation and data processing for visual landing guidance is the nearest-term use case defined by Daedalean in its joint report with EASA. The actual function of the neural network is described as a form of object detection, in which the network determines whether the images it analyzes show the four corners or outline of a runway that is safe to land on.

Daedalean’s next goal is to release a design assurance level (DAL) C version of its autopilot system by 2021, while continuing work on an eventual DAL-A version.